Murder in the Smart Home – Inspector Columbo investigates!

2 March 1975: "Replay" – Columbo investigates Oskar Werner!

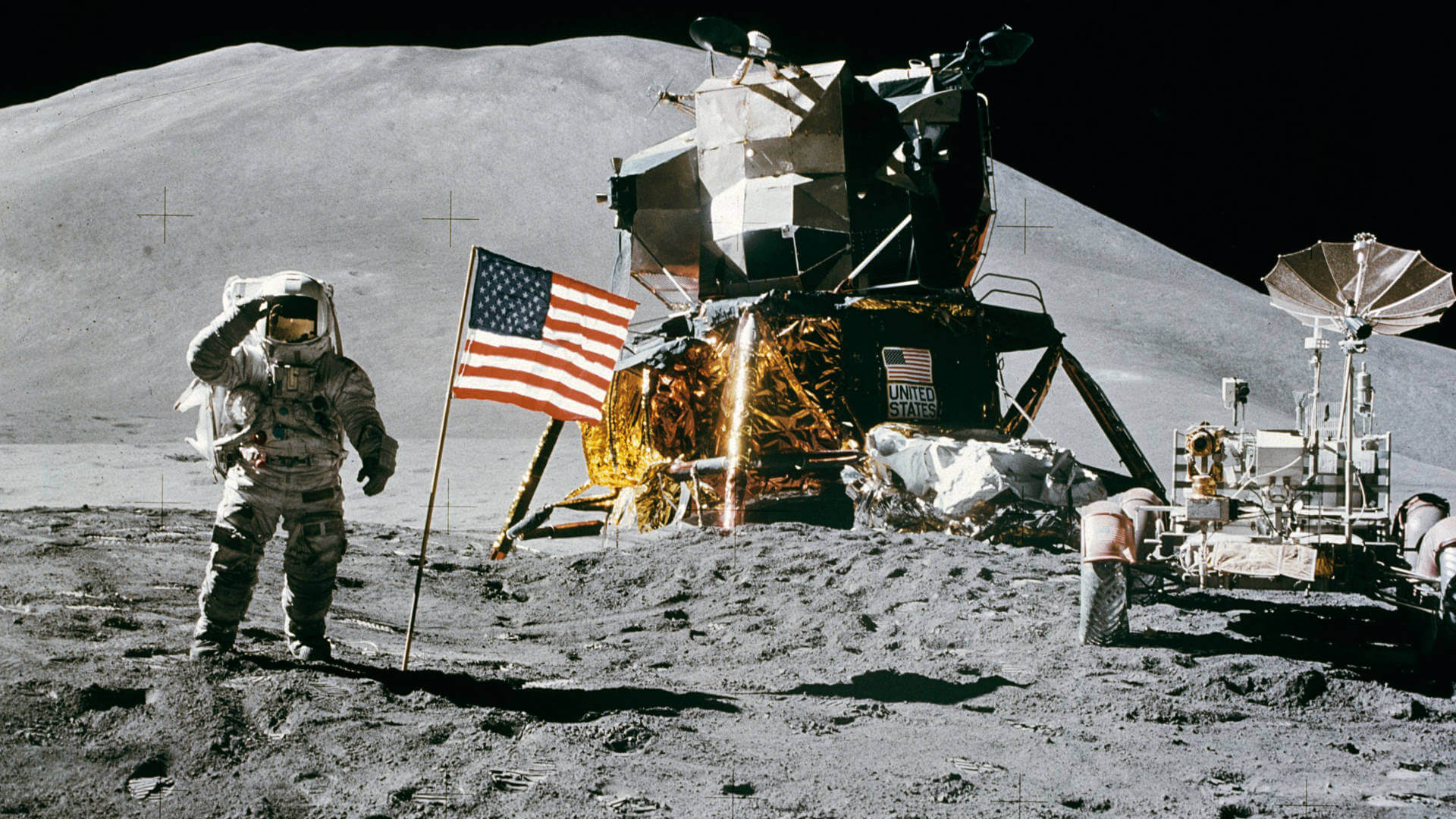

1975: a historical epoch of mankind was ending. The US Apollo program, launched in 1961, had been completed, and the race between the USSR and the USA for dominance in space had been decided. Around the globe, enthusiasm for technology had broken out. Everything seemed possible, and the Apollo program also left its mark on everyday life. Spin-offs such as Teflon found their place in the kitchen, and Texas Instruments calculators moved into classrooms, where "moon landing" could be played with a simple program.

Hollywood, too, was fascinated by space and the revolutionary technologies that emerged from the Apollo program. In 1977 Steven Spielberg thrilled cinephiles with "Close Encounters of the Third Kind", and in "Replay" Inspector Columbo investigated Harold van Wyck, played by Oskar Werner, the head of an electronics company who had conceived a "fully technological house".

It was the first smart home in film history, and it shows how Hollywood imagined the house of the future. Harold van Wyck's wheelchair-bound mother-in-law could open doors, switch lights on and off and more with gestures. The property was, of course, completely video-monitored, a novelty at the time. The surveillance technology and gesture control that van Wyck devised later convicted him of his mother-in-law's murder. Smart homes and surveillance, Alexa and Co., are once again a topic in murder investigations today. "Replay" was a glimpse into the future.

One more question: "What is a gesture, Professor?"

"I have one more question", the legendary sentence of Inspector Columbo, who got to the bottom of things in an annoyingly tormenting way. "What is a gesture?" - seems to be a more scientific question than of practical relevance to human-machine communication. But anyone who wants to teach machines to understand human gestures will soon discover the question has more practical relevance than expected.

Gesture research is a young science. It established itself only in the mid-1990s and sits between linguistics and psychology. It understands gestures as movements of the head, hands or whole body in connection with speech. In the scientific sense, then, the familiar smartphone "gestures" are not gestures at all, because they lack the linguistic component. This already reveals the first problem of human-machine communication via gestures: currently, it works only via "invented" discrete gestures without a linguistic component. When it comes to machines understanding everyday human gestures, technology still has a long way to go.

Gestures viewed analytically.

To make "real" human gestures "understandable" to machines, their time sequence (an inherent prerequisite of any gesture) must be considered analytically. Typically, a gesture consists of three to four phases: Preparation, Stroke, Recovery and possibly Re-Stroke. An example of a discrete gesture: suppose someone wants to buy a scoop of ice cream of a flavour whose name they do not know.

The communication with the ice cream seller will go something like this:

- Preparation: The hand is raised and points to the desired flavour with the index finger outstretched.

- Stroke: The position is held and followed by the verbal component: "this one please".

- Recovery: The hand is lowered.

If the gesture was not understood correctly, e.g. because 25 different flavours are lined up and the ice cream seller reached for the wrong one, the last phase takes place:

- Re-Stroke: The pointing is repeated, for example, together with the verbal remark "no, this one please".
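The phase sequence from the ice cream example can be written down as a small state machine. The following is only a minimal sketch: the names `Phase`, `ALLOWED_NEXT` and `is_valid_gesture` are invented for illustration, and the rule that a re-stroke may be followed by another recovery is an assumption that goes beyond the description above.

```python
from enum import Enum


class Phase(Enum):
    PREPARATION = "preparation"
    STROKE = "stroke"
    RECOVERY = "recovery"
    RE_STROKE = "re-stroke"


# Allowed successor phases, following the order described in the text.
# Assumption: after a re-stroke the hand may be lowered again (recovery).
ALLOWED_NEXT = {
    None: {Phase.PREPARATION},
    Phase.PREPARATION: {Phase.STROKE},
    Phase.STROKE: {Phase.RECOVERY},
    Phase.RECOVERY: {Phase.RE_STROKE},
    Phase.RE_STROKE: {Phase.RECOVERY},
}


def is_valid_gesture(phases):
    """Return True if the phase sequence forms one complete gesture."""
    previous = None
    for phase in phases:
        if phase not in ALLOWED_NEXT[previous]:
            return False
        previous = phase
    # A gesture is complete after the recovery or after an optional re-stroke.
    return previous in (Phase.RECOVERY, Phase.RE_STROKE)
```

A check like this only validates the order of phases that have already been recognised. Detecting the phases themselves in sensor data is the far harder part.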

This simple example alone makes it clear how complex machine understanding of even the simplest everyday gestures will be. These are gestures that were not invented for computers: gesture communication in which the human being does not adapt to the machine, but the machine must instead interpret the human being.

The poker face is also an important gesture.

In the classical understanding, a gesture presupposes a movement. A gesture is therefore something dynamic, a movement that runs along a time axis and is always concluded with a verbal act. Dynamic gestures occur as "discrete gestures" or "continuous gestures". An example of the former is pointing at something; an example of a continuous gesture is the movement performed by a conductor. All of this seems easy for a machine to handle.

However, new approaches in gesture research, especially with regard to human-machine communication, have found that treating only movements as gestures is not enough for Gesture 4.0. People also interpret the absence of movement in the overall context. Not answering a question can also be a conscious gesture; it can signal that the question is being deliberately ignored. Showing a "thumbs-up", for example, is also a gesture that requires neither a movement nor a "stroke" component, i.e. a verbal component, to be understood.

The complexity is increasing – cultural environment, temporal context and more.

If one digs even deeper into potential human-machine gesture communication, one encounters a wide range of further problems. Thanks to the high computing power of smartphones, discrete gestures can already be interpreted relatively accurately by matching them against pattern databases, provided the device has the right "cultural database" available. Gestures that are friendly in Europe can be offensive in the Middle East or Asia. This can still be solved, at least in part, using GPS data and corresponding pattern databases.
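How a GPS position could select the right "cultural database" can be sketched in a few lines. Everything in this example is hypothetical: the gesture and region names, the bounding boxes and the meanings are placeholders chosen for illustration, not verified cultural data, and a real system would need far finer regional distinctions.

```python
# Hypothetical pattern database: (gesture, region) -> meaning.
# The entries are illustrative placeholders, not verified cultural data.
PATTERN_DB = {
    ("gesture_a", "region_1"): "friendly",
    ("gesture_a", "region_2"): "offensive",
    ("gesture_b", "region_1"): "neutral",
}

# Hypothetical, arbitrarily chosen bounding boxes:
# region -> (lat_min, lat_max, lon_min, lon_max)
REGION_BOXES = {
    "region_1": (35.0, 70.0, -10.0, 30.0),
    "region_2": (10.0, 35.0, 30.0, 60.0),
}


def region_for(lat, lon):
    """Map a GPS position to a region name, or None if no box matches."""
    for region, (lat_min, lat_max, lon_min, lon_max) in REGION_BOXES.items():
        if lat_min <= lat < lat_max and lon_min <= lon < lon_max:
            return region
    return None


def interpret(gesture, lat, lon):
    """Look up the meaning of a recognised gesture at the given position."""
    region = region_for(lat, lon)
    return PATTERN_DB.get((gesture, region), "unknown")
```

Returning "unknown" instead of guessing reflects the limit described above: location narrows the interpretation, but it says nothing about who is performing the gesture.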

The complexity increases further once it is understood that gestures are also subject to cultural and temporal change. To interpret gestures correctly in everyday life, an understanding of the counterpart is required: age, social class, cultural background and more. A human being sees this; a machine does not.

Multisensor technology and machine learning.

The first steps in human-machine communication have been taken, but nothing more. Classical gesture research takes for granted all the experience and sensory organs available to a person for interpreting a gesture. In human-machine communication this is not the case. Analysing a movement and initiating a pattern database comparison is not enough. The linguistic component must be added and, above all, the machine must know in which situational environment the gesture takes place, who performed it and where.

Machine interpretation of real human gestures with all their individual facets requires the analysis and interpretation of an immense data set from different sensors: time series data from multiple sensors, which must be harmonized, analyzed and interpreted with high performance. The challenges seem enormous but manageable.
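What "harmonizing" time series from several sensors can mean in practice is shown in the following minimal sketch. It assumes that each sensor delivers a sorted list of (timestamp, value) pairs with integer millisecond timestamps, and it uses simple linear interpolation onto a shared time grid. The function names `interpolate` and `harmonize` are invented for this example; real systems may use quite different resampling methods.

```python
from bisect import bisect_left


def interpolate(samples, t):
    """Linearly interpolate a sorted list of (timestamp, value) pairs at time t."""
    times = [ts for ts, _ in samples]
    # Outside the measured range, fall back to the nearest sample.
    if t <= times[0]:
        return samples[0][1]
    if t >= times[-1]:
        return samples[-1][1]
    i = bisect_left(times, t)
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def harmonize(streams, step_ms):
    """Resample all sensor streams onto one shared time grid.

    streams maps a sensor name to its sorted (timestamp_ms, value) samples.
    The grid covers only the time span in which every sensor has data.
    """
    start = max(samples[0][0] for samples in streams.values())
    end = min(samples[-1][0] for samples in streams.values())
    rows = []
    for t in range(start, end + 1, step_ms):
        row = {"t": t}
        for name, samples in streams.items():
            row[name] = interpolate(samples, t)
        rows.append(row)
    return rows
```

Once all sensors share the same time stamps, each row can be treated as a single observation for the subsequent analysis and interpretation.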

Read on: Sensors and interpretation.

Human-machine communication, Gesture 4.0 or whatever it ends up being called, is one of the burning issues of the future. How well it is solved will, among other things, have a decisive influence on the quality of geriatric care.

This contribution, the first part of a trilogy, took a fundamental look at gestures as a topic of the future. The following two articles will examine approaches to sensor technology and then approaches to analysis and interpretation – Big Data with a time stamp.

Contact the author Stefan Komornyik.

https://www.hakom.at/en/news-detail/news/wearables-the-big-data-machines/